2010年12月24日金曜日

[StreamGraph] Mining Large Time-evolving Data Using Matrix and Tensor Tools, SIGMOD 20007 tutorial

http://www.cs.cmu.edu/~christos/TALKS/SIGMOD-07-tutorial/

How can we find patterns in sensor streams (eg., a sequence of temperatures, water-pollutant measurements, or machine room measurements)? How can we mine Internet traffic graph over time? Further, how can we make the process incremental? We review the state of the art in four related fields: (a) numerical analysis and linear algebra (b) multi-linear/tensor analysis (c) graph mining and (d) stream mining. We will present both theoretical results and algorithms as well as case studies on several real applications. Our emphasis is on the intuition behind each method, and on guidelines for the practitioner.

[StreamGraph] 既存のインクリメンタルグラフマイニング

2. Incremental Pattern Discovery on Streams, Graphs and Tensors, 2007

2010年12月23日木曜日

[StreamGraph] インクリメンタルな Proximity 計算

http://portal.acm.org/citation.cfm?id=1390269

In this paper we investigate two aspects of ranking problems on large graphs. First, we augment the deterministic pruning algorithm in Sarkar and Moore (2007) with sampling techniques to compute approximately correct rankings with high probability under random walk based proximity measures at query time. Second, we prove some surprising locality properties of these proximity measures by examining the short term behavior of random walks. The proposed algorithm can answer queries on the fly without caching any information about the entire graph. We present empirical results on a 600, 000 node author-word-citation graph from the Citeseer domain on a single CPU machine where the average query processing time is around 4 seconds. We present quantifiable link prediction tasks. On most of them our techniques outperform Personalized Pagerank, a well-known diffusion based proximity measure.

[StreamGraph] Incremental PageRank 関連論文

http://homepages.inf.ed.ac.uk/miles/msc-projects/abdullah.pdf

Fast Incremental and Personalized PageRank

http://www.citeulike.org/user/seeding-films/article/7795698

2010年12月19日日曜日

[StreamGraph] RWR の並列化・高速化

2010年12月16日木曜日

[StreamGraph] 本プロジェクトの方向性

あえて、上記で、「リアルタイム性」という表現を使って、データストリーム処理というキーワードを使わなかったかだが、それはGIM-Vの計算モデルを、GPUのようなアクセラレータを用いて既存のバッチ処理モデルを超並列処理してリアルタイム性を実現する手法、なども考えられるからだ。また、データストリーム処理というコンテキストで言えば、(1)インクリメンタルな差分更新による処理の効率化を実現する手法、(2)ヒューリスティックス(例:雁瀬くんの二次の隔たりの特性を生かしたグラフ分割と処理の分散)を用いた性能最適化、(3)メモリに載りきらないようなグラフストリームが到着した際に Persistent な分散ファイルシステムと協調し階層的にグラフ構造を格納、頻繁にアクセスされる部分グラフのみをオンメモリ上に確保するといった最適化手法、そしてそれらのグラフデータに対して開発者からシームレスにアクセスできるような処理基盤、と言った研究テーマが考えられるでしょう。

上記の研究テーマを遂行するにあたって、まずはとっかかりとして、バッチ処理の統一的な処理モデルとして提案されているGIM-Vおよびそのリファレンス実装であるPEGASUSを軸に、上記テーマの実現手法を考えていきましょう。例えば、このブログの前の記事に Incremental PageRank の論文を載せましたが、それをPEGASUS上(もしくは System S 上で)で実装してみて、GIM-V,プログラミングモデル、処理系の gap を分析し、上記(2)や(3)((1)もできれば)を自然に表現できるモデルを提案できると良い研究成果につながると思います。

2010年12月15日水曜日

[StreamGraph] 時間的に変化する動的グラフに関する研究

Mining Temporally Evolving Graphs, 2004

http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.103.6282

>Web mining has been explored to a vast degree and different techniques have been proposed for a variety of applications that include Web Search, Web Classification, Web Personalization etc. Most research on Web mining has been from a ‘data-centric ’ point of view. The focus has been primarily on developing measures and applications based on data collected from content, structure and usage of Web till a particular time instance. In this project we examine another dimension of Web Mining, namely temporal dimension. Web data has been evolving over time, reflecting the ongoing trends. These changes in data in the temporal dimension reveal new kind of information. This information has not captured the attention of the Web mining research community to a large extent. In this paper, we highlight the significance of studying the evolving nature of the Web graphs. We have classified the approach to such problems at three levels of analysis: single node, sub-graphs and whole graphs. We provide a framework to approach problems of this kind and have identified interesting problems at each level. Our experiments verify the significance of such analysis and also point to future directions in this area. The approach we take is generic and can be applied to other domains, where data can be modeled as graph, such as network intrusion detection or social networks.

[StreamGraph] インクリメンタルなネットワーク分析手法

2010年12月14日火曜日

[Data Distribution] 投稿予定

| Technical Papers Due: | 17 January 2011 |

| PAPER DEADLINE EXTENDED: | 24 January 2011 at 12:01 PM (NOON) Eastern Time |

| Author Notifications: | 28 February 2011 |

| Paper Submission: | January 31, 2011 |

| Paper Submission to highlights track: | March 4, 2011 |

| Paper Notification: | March 21, 2011 |

[StreamCloud] 学会投稿予定

[StreamGraph] Graph 500 Update

2010年12月10日金曜日

[StreamGraph] SNAP (Stanford Network Analysis Package)

[StreamGraph] Jon Kleinberg's Survey

- Survey Paper

- J. Kleinberg. Temporal Dynamics of On-Line Information Streams. Draft chapter for the forthcoming book Data Stream Management: Processing High-Speed Data Streams (M. Garofalakis, J. Gehrke, R. Rastogi, eds.), Springer.

- Survey Paper

- Short Time Scales: Usage Data and Bursty Dynamics

- L.R. Rabiner. A tutorial on hidden Markov models and selected applications in speech recognition. In Proc. IEEE, Vol. 77, No. 2, pp. 257-286, Feb. 1989

- J. Allan, J.G. Carbonell, G. Doddington, J. Yamron, Y. Yang, Topic Detection and Tracking Pilot Study: Final Report. Proc. DARPA Broadcast News Transcription and Understanding Workshop, Feb. 1998.

- R. Swan, J. Allan, Automatic generation of overview timelines. Proc. SIGIR Intl. Conf. on Research and Development in Information Retrieval, 2000.

- S. Havre, B. Hetzler, L. Nowell, ThemeRiver: Visualizing Theme Changes over Time. Proc. IEEE Symposium on Information Visualization, 2000.

- J. Kleinberg. Bursty and Hierarchical Structure in Streams. Proc. 8th ACM SIGKDD Intl. Conf. on Knowledge Discovery and Data Mining, 2002.

- J. Aizen, D. Huttenlocher, J. Kleinberg, A. Novak. Traffic-Based Feedback on the Web. Proceedings of the National Academy of Sciences 101(Suppl.1):5254-5260, 2004.

- R. Kumar, J. Novak, P. Raghavan, A. Tomkins. On the bursty evolution of Blogspace. Proc. International WWW Conference, 2003.

- Y. Zhu and D. Shasha. Efficient Elastic Burst Detection in Data Streams. Proc. ACM SIGKDD Intl. Conf. on Knowledge Discovery and Data Mining, 2003.

- E. Gabrilovich, S. Dumais, E. Horvitz. NewsJunkie: Providing Personalized Newsfeeds via Analysis of Information Novelty. Proceedings of the Thirteenth International World Wide Web Conference. May 2004.

- M. Vlachos, C. Meek, Z. Vagena, D. Gunopulos. Identifying Similarities, Periodicities and Bursts for Online Search Queries. Proc. ACM SIGMOD International Conference on Management of Data, 2004.

- A..-L. Barabasi. The origin of bursts and heavy tails in human dynamics. Nature 435, 207-211 (2005).

- Micah Dubinko, Ravi Kumar, Joseph Magnani, Jasmine Novak, Prabhakar Raghavan, Andrew Tomkins. Visualizing Tags over Time. WWW2006 Conference. See also the demo of flickr tag visualization.

- Xuerui Wang and Andrew McCallum. Topics over Time: A Non-Markov Continuous-Time Model of Topical Trends. Conference on Knowledge Discovery and Data Mining (KDD) 2006.

- Xuanhui Wang, ChengXiang Zhai, Xiao Hu, and Richard Sproat, Mining Correlated Bursty Topic Patterns from Coordinated Text Streams. Proceedings of the 2007 ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD'07 ), pages 784-793.

- Short Time Scales: Usage Data and Bursty Dynamics

Temporal Analysis and Bursty Phenomena

- J. Leskovec, L. Backstrom, J. Kleinberg. Meme-tracking and the dynamics of the news cycle. Proc. 15th ACM SIGKDD Intl. Conf. on Knowledge Discovery and Data Mining, 2009.

- See also the commpanying MemeTracker site, which uses the ideas in this paper to visualize the news cycle.

- L. Backstrom, J. Kleinberg, R. Kumar. Optimizing Web traffic via the Media Scheduling Problem. Proc. 15th ACM SIGKDD Intl. Conf. on Knowledge Discovery and Data Mining, 2009.

- J. Kleinberg. Temporal Dynamics of On-Line Information Streams. In Data Stream Management: Processing High-Speed Data Streams, (M. Garofalakis, J. Gehrke, R. Rastogi, eds.), Springer, 2004.

- J. Aizen, D. Huttenlocher, J. Kleinberg, A. Novak. Traffic-Based Feedback on the Web. Proceedings of the National Academy of Sciences 101(Suppl.1):5254-5260, 2004. (Also available in pre-print form.)

- J. Kleinberg. Bursty and Hierarchical Structure in Streams. Proc. 8th ACM SIGKDD Intl. Conf. on Knowledge Discovery and Data Mining, 2002.

- See also some sample results from the burst detection algorithm described in this paper.

[StreamGraph] スタンフォード大の公開データ

[StreamGraph] Facebook Data

[StreamGraph] Livedoor Data

[StreamGraph] データストリームに対する相関ルールを用いたコミュニティの時系列解析

Body sensor data processing using stream computing

Body sensor data processing using stream computing

2010年12月9日木曜日

2010年12月8日水曜日

[StreamGraph] 食べログ

[StreamGraph] Mixi Graph API

[StreamGraph] Facebook Graph API

Facebook Graph API を用いれば実データは取れるそうだ。

------

The announcement was glossed over during Facebook’s many launches at f8 yesterday, but the company has introduced ways for developers to conduct a variety of searches for content in its Graph API. This could benefit any number of search companies and other developers who are looking for lots of public data to aggregate and organize.

The Open Graph allows applications, Pages, web sites and other software services to add Facebook features, like the “Like” button, to their own sites. Taking actions on other sites results in those actions being shared with your friends on Facebook, and may allow friends on those sites to see what you’re doing, too.

Many users have their privacy settings configured to allow status updates, likes and other activity to be public. Its terminology for this data is “public objects.” The generic search format is: https://graph.facebook.com/search?q=QUERY&type=OBJECT_TYPE.

Here are the specific types of searches that can be done:

News feeds of individuals can be searched, too, but you’ll need authorization. Here’s an example:

News Feed: https://graph.facebook.com/me/home?q=facebook

This search is restricted to public data about users and their immediate friends (not, say, their friends of friends), in keeping with Facebook’s existing data-sharing policies.

2010年12月7日火曜日

[StreamGraph] グラフアルゴリズムの GPU 化

2010年12月3日金曜日

[StreamGraph] Mixi Graph API

インターネットコンテンツの扱いに関して

[StreamGraph] Netflix Challenge

2010年12月2日木曜日

各種ネット企業の Web サービス API

[StreamGraph] Social Graph API

2010年11月30日火曜日

2010年11月25日木曜日

VLDB 2010

Dawei Jiang (National University of Singapore, Republic of Singapore), Beng Chin Ooi (National University of Singapore, Republic of Singapore), Lei Shi (National University of Singapore, Republic of Singapore), Sai Wu (National University of Singapore, Republic of Singapore)

Willis Lang (University of Wisconsin-Madison, United States of America), Jignesh Patel (University of Wisconsin-Madison, United States of America)

Bogdan Alexe (University of California Santa Cruz, United States of America), Mauricio Hernández (IBM Research, United States of America), Lucian Popa (IBM Almaden Research Center, United States of America), Wang-Chiew Tan (University of California Santa Cruz, United States of Americ

Wook-Shin Han (Kyungpook National University, Republic of Korea), Jinsoo Lee (Kyungpook National University, Republic of Korea), Minh-Duc Pham (Kyungpook National University, Republic of Korea), Jeffrey Yu (The Chinese University of Hong Kong, People’s Republic of China)

Peixiang Zhao (University of Illinois at Urbana-Champaign, United States of America), Jiawei Han (University of Illinois at Urbana-Champaign, United States of America)

Sergey Melnik (Google, United States of America), Andrey Gubarev (Google, United States of America), Jing Jing Long (Google, United States of America), Geoffrey Romer (Google, United States of America), Shiva Shivakumar (Google, United States of America), Matt Tolton (Google, United States of America), Theo Vassilakis (Google, United States of America)

Yingyi Bu (University of Washington, United States of America), Bill Howe (University of Washington, United States of America), Magdalena Balazinska (University of Washington, United States of America), Michael Ernst (University of Washington, United States of America)

p.264: Graph Pattern Matching: From Intractable to Polynomial Time

Wenfei Fan (University of Edinburgh, United Kingdom), Jianzhong Li (Harbin Institute of Technology, People’s Republic of China), Shuai Ma (University of Edinburgh, United Kingdom), Nan Tang (University of Edinburgh, United Kingdom), Yinghui Wu (University of Edinburgh, United Kingdom), Yunpeng Wu (National University of Defense Technology, People’s Republic of China)

p.276: GRAIL: Scalable Reachability Index for Large Graphs

Hilmi Yildirim (Rensselaer Polytechnic Institute, United States of America), Vineet Chaoji (Yahoo! Research Labs, United States of America), Mohammed Zaki (Rensselaer Polytechnic Institute, United States of America

p.220: High-Performance Dynamic Pattern Matching over Disordered Streams

Badrish Chandramouli (Microsoft Research, United States of America), Jonathan Goldstein (Microsoft Research, United States of America), David Maier (Portland State University, United States of America)

p.232: SECRET: A Model for Analysis of the Execution Semantics of Stream Processing Systems

Irina Botan (Eidgenössische Technische Hochschule Zürich, Switzerland), Roozbeh Derakhshan (Eidgenössische Technische Hochschule Zürich, Switzerland), Nihal Dindar (Eidgenössische Technische Hochschule Zürich, Switzerland), Laura Haas (IBM Almaden Research Center, United States of America), Renée Miller (University of Toronto, Canada), Nesime Tatbul (Eidgenössische Technische Hochschule Zürich, Switzerland)

p.244: Recognizing Patterns in Streams with Imprecise Timestamps

Haopeng Zhang (University of Massachusetts Amherst, United States of America), Yanlei Diao (University of Massachusetts Amherst, United States of America), Neil Immerman (University of Massachusetts Amherst, United States of America)

2010年11月17日水曜日

2010年11月16日火曜日

プロジェクト体制のまとめ

GPU における ゼロコピー通信

[StreamGraph] ターゲットアプリケーション

- グラフの中心性 (HITS, PageRank,マルコフ中心性)

- クラスタリング(Betweennessアルゴリズム, KMeans, ...) 、セミクラスタリング

- Triangle の抽出

- 2部グラフの最大マッチング

- 最短経路問題

- グラフ直径

- ...

[StreamGraph] Jung

2010年11月15日月曜日

国内学会

2010年11月8日月曜日

[StreamGraph] Related Papers

[StreamGraph] Random Walk with Restart

[StreamGraph] Triangle Law

The co-authorship network of scientists represents a prototype of complex evolving networks. In

addition, it offers one of the most extensive database to date on social networks. By mapping the

electronic database containing all relevant journals in mathematics and neuro-science for an eightyear period (1991-98), we infer the dynamic and the structural mechanisms that govern the evolution and topology of this complex system. Three complementary approaches allow us to obtain a detailed characterization. First, empirical measurements allow us to uncover the topological measures that characterize the network at a given moment, as well as the time evolution of these quantities.

The results indicate that the network is scale-free, and that the network evolution is governed by

preferential attachment, affecting both internal and external links. However, in contrast with most model predictions the average degree increases in time, and the node separation decreases. Second, we propose a simple model that captures the network’s time evolution. In some limits the model can be solved analytically, predicting a two-regime scaling in agreement with the measurements. Third, numerical simulations are used to uncover the behavior of quantities that could not be predicted analytically. The combined numerical and analytical results underline the important role internal links play in determining the observed scaling behavior and network topology. The results and methodologies developed in the context of the co-authorship network could be useful for a systematic study of other complex evolving networks as well, such as the world wide web, Internet,or other social networks.

Structure of a large social network. Barabashi, et.al, 2003

We study a social network consisting of over 104 individuals, with a degree distribution exhibiting

two power scaling regimes separated by a critical degree kcrit, and a power law relation between

degree and local clustering. We introduce a growing random model based on a local interaction

mechanism that reproduces all of the observed scaling features and their exponents. Our results

lend strong support to the idea that several very different networks are simultenously present in the human social network, and these need to be taken into account for successful modeling

2010年11月7日日曜日

[StreamGraph] Fast Counting of Triangles in Large Real Networks: Algorithms and Laws

Fast Counting of Triangles in Large Real Networks: Algorithms and Laws

How can we quickly nd the number of triangles in a large graph, without actually counting them? Triangles are important for real world social networks, lying at the heart of the clustering coe cient and of the transitivity ratio. However, straight-forward and even approximate counting algorithms can be slow, trying to execute or approximate the equivalent of a 3-way

database join. In this paper, we provide two algorithms, the Eigen-Triangle for counting the total number of triangles in a graph, and the EigenTriangleLocal algorithm that gives the count of triangles that contain a desired node. Additional contributions include the following: (a) We show that both algorithms achieve excellent accuracy, with up to 1000x faster execution time, on several, real graphs and (b) we discover two new power laws ( Degree-Triangle and TriangleParticipa-

tion laws) with surprising properties.

EigenSpokes: Surprising Patterns and Scalable Community Chipping in Large Graphs

通話記録からのソーシャルグラフのパターン検知

EigenSpokes: Surprising Patterns and Scalable Community Chipping in Large Graphs

We report a surprising, persistent pattern in large sparse social graphs, which we term EigenSpokes. We focus on large Mobile Call graphs, spanning about 186K nodes and millions of calls, and find that

the singular vectors of these graphs exhibit a striking EigenSpokes pattern wherein, when plotted against each other, they have clear, separate lines that often neatly align along specific axes (hence the term “spokes”). Furthermore, analysis of several other real-world datasets e.g., Patent Citations, Internet, etc. reveals similar phenomena indicating this to be a more fundamental attribute of large sparse graphs that is related to their community structure. This is the first contribution of this paper. Additional ones include (a) study of the conditions that lead to such EigenSpokes, and (b) a fast algorithm for spotting and extracting tightly-knit communities, called

SpokEn, that exploits our findings about the EigenSpokes pattern

MapReduce 上のグラフアルゴリズム

http://www.umiacs.umd.edu/~jimmylin/publications/Lin_Schatz_MLG2010.pdf

Jimmy Lin, Michael Schatz, University of Maryland

Graphs are analyzed in many important contexts, including ranking search results based on the hyperlink structure of the world wide web, module detection of protein-protein interaction networks, and privacy analysis of social networks. MapReduce provides an enabling technology for large-scale graph processing. However, there appears to be a paucity of knowledge on designing scalable graph algorithms. Existing best practices for MapReduce graph algorithms have significant shortcomings that limit performance, especially with respect to partitioning, serializing, and distributing the graph. We present three design patterns that address these issues and can be used to accelerate a large class of graph algorithms based on message passing, exemplified by PageRank. Experiments show that the application of our design patterns reduces the running time of PageRank on a web graph with 1.4 billion edges by 69%.

Graph Processing with MapReduce

http://horicky.blogspot.com/2010/07/graph-processing-in-map-reduce.html

Twister: Iterative MapReduce

Twister http://www.iterativemapreduce.org/

[StreamGraph] Extended Library for Graph Processing

http://www.springerlink.com/content/6drvfrkj7uu4hq0a/

We present a software library , that enables practice-oriented experimentation with huge data sets. is an implementation of the C++ standard template library STL for external memory computations. It supports parallel disks, overlapping between I/O and computation, and pipelining technique that can save more than half of the I/Os. has already been used for computing minimum spanning trees, connected components, breadth-first search decompositions, constructing suffix arrays, and computing social network analysis metrics.

Building a Parallel Pipelined External Memory Algorithm Library

Large and fast hard disks for little money have enabled the processing of huge amounts of data on a single machine. For this purpose, the well-established STXXL library provides a framework for external memory algorithms with an easy-to-use interface. However, the clock speed of processors

cannot keep up with the increasing bandwidth of parallel disks, making many algorithms actually compute-bound. To overcome this steadily worsening limitation, we exploit today’s multi-core processors with two new approaches. First, we parallelize the internal computation

of the encapsulated external memory algorithms by utilizing the MCSTL library. Second, we augment the unique pipelining feature of the STXXL, to enable automatic task parallelization.

We show using synthetic and practical use cases that the combination of both techniques increases performance

greatly.

http://algo2.iti.kit.edu/singler/publications/parallelstxxl-ipdps2009.pdf

2010年11月6日土曜日

[StreamGraph] Max-Cover in Map-Reduce

http://research.google.com/pubs/pub36582.html

The NP-hard Max-k-cover problem requires selecting k sets from a collection so as to maximize the size of the union. This classic problem occurs commonly in many settings in web search and advertising. For moderately-sized instances, a greedy algorithm gives an approximation of (1-1/e). However, the greedy algorithm requires updating scores of arbitrary elements after each step, and hence becomes intractable for large datasets. We give the first max cover algorithm designed for today's large-scale commodity clusters. Our algorithm has provably almost the same approximation as greedy, but runs much faster. Furthermore, it can be easily expressed in the MapReduce programming paradigm, and requires only polylogarithmically many passes over the data. Our experiments on five large problem instances show that our algorithm is practical and can achieve good speedups compared to the sequential greedy algorithm.

Apache HAMA

Apache Hama is a distributed computing framework based on BSP (Bulk Synchronous Parallel) computing techniques for massive scientific computations (e.g., matrix, graph, network, ..., etc), currently being incubated as one of the incubator project by the Apache Software Foundation.

Paper

http://salsahpc.indiana.edu/CloudCom2010/papers.html

DisCo: Distributed Co-clustering with Map-Reduce: A Case Study towards Petabyte-Scale End-to-End Mining

PDF http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.163.1677&rep=rep1&type=pdf

Huge datasets are becoming prevalent; even as researchers, we now routinely have to work with datasets that are up to a few terabytes in size. Interesting real-world applications produce huge volumes of messy data. The mining process involves several steps, starting from pre-processing the raw data to estimating the final models. As data become more abundant, scalable and easy-to-use tools for distributed processing are also emerging. Among those, Map-Reduce has been widely embraced by both academia and industry.

[StreamGraph] PEGASUS (Peta Graph Mining System)

Paper URL ->

http://www.cs.cmu.edu/~ukang/papers/PegasusICDM2009.pdf

PEGASUS: A Peta-Scale Graph Mining System - Implementation and Observations

In this paper, we describe PEGASUS, an open source Peta Graph Mining library which performs typical graph mining tasks such as computing the diameter of the graph, computing the radius of each node and finding the connected components. As the size of graphs reaches several Giga-, Tera- or Peta-bytes, the necessity for such a library grows too. To the best of our knowledge, PEGASUS is the first such library, implemented on the top of the HADOOP platform, the open source version of MAPREDUCE. Many graph mining operations (PageRank, spectral clustering,diameter estimation, connected components etc.) are essentially a repeated matrix-vector multiplication. In this paper we describe a very important primitive for PEGASUS, called GIM-V (Generalized Iterated Matrix-Vector multiplication). GIM-V is highly optimized, achieving (a) good scale-up on the number of available machines (b) linear running time on thenumber of edges, and (c) more than 5 times faster performance over the non-optimized version of GIM-V. Our experiments ran on M45, one of the top 50 supercomputers in the world. We report our findings on several real graphs, including one of the largest publicly available Web Graphs, thanks to Yahoo!, with 6,7 billion edges.

2010年11月5日金曜日

MapReduce Online

MapReduce is a popular framework for data-intensive distributed computing of batch jobs. To simplify fault tolerance, the output of each MapReduce task and job is materialized to disk before it is consumed. In this paper, we propose a modified MapReduce architecture that allows data to be pipelined between operators. This extends the MapReduce programming model beyond batch processing, and can reduce completion times and improve system utilization for batch jobs as well. We present a modified version of the Hadoop MapReduce framework that supports online aggregation, which allows users to see "early returns" from a job as it is being computed. Our Hadoop Online Prototype (HOP) also supports continuous queries, which enable MapReduce programs to be written for applications such as event monitoring and stream processing. HOP retains the fault tolerance properties of Hadoop, and can run unmodified user-defined MapReduce programs.

[StreamScale] ZooKeeper: Wait-free coordination for Internet-scale systems

ZooKeeper: Wait-free coordination for Internet-scale systems

Authors:Hunt, P.; Konar, M.; Junqueira, F.P.; Reed, B.

Source: USENIX Annual Technology Conference (2010)

Abstract: In this paper, we describe ZooKeeper, a service for coordinating processes of distributed applications. Since ZooKeeper is part of critical infrastructure, ZooKeeper aims to provide a simple and high performance kernel for building more complex coordination primitives at the client. It incorporates elements from group messaging, shared registers, and distributed lock services in a replicated, centralized service. The interface exposed by Zoo- Keeper has the wait-free aspects of shared registers with an event-driven mechanism similar to cache invalidations of distributed file systems to provide a simple, yet powerful coordination service. The ZooKeeper interface enables a high-performance service implementation. In addition to the wait-free property, ZooKeeper provides a per client guarantee of FIFO execution of requests and linearizability for all requests that change the ZooKeeper state. These design decisions enable the implementation of a high performance processing pipeline with read requests being satisfied by local servers. We show for the target workloads, 2:1 to 100:1 read to write ratio, that ZooKeeper can handle tens to hundreds of thousands of transactions per second. This performance allows ZooKeeper to be used extensively by client applications.

[StreamScale] S4: Distributed Stream Computing Platform

http://labs.yahoo.com/node/476

S4: Distributed Stream Computing Platform

Authors: Neumeyer L, Robbins B, Nair A, Kesari A

Abstract: S4 is a general-purpose, distributed, scalable, partially fault-tolerant, pluggable platform that allows programmers to easily develop applications for processing continuous unbounded streams of data. Keyed data events are routed with affinity to Processing Elements (PEs), which consume the events and do one or both of the following: (1) emit one or more events which may be consumed by other PEs, (2) publish results. The architecture resembles the Actors model [1], providing semantics of encapsulation and location transparency, thus allowing applications to be massively concurrent while exposing a simple programming interface to application developers. In this paper, we outline the S4 architecture in detail, describe various applications, including real-life deployments. Our de- sign is primarily driven by large scale applications for data mining and machine learning in a production environment. We show that the S4 design is surprisingly flexible and lends itself to run in large clusters built with commodity hardware.

2010年11月4日木曜日

2010年11月2日火曜日

[StreamGraph] グラフベンチマーク Graph 500

Web Page: http://www.graph500.org/

仕様

http://www.graph500.org/spec.html

実装 v1.2

http://www.graph500.org/downloads.html

Data intensive supercomputer applications are increasingly important HPC workloads, but are ill suited for platforms designed for 3D physics simulations. Current benchmarks and performance metrics do not provide useful information on the suitability of supercomputing systems for data intensive applications. A new set of benchmarks is needed in order to guide the design of hardware architectures and software systems intended to support such applications and to help procurements. Graph algorithms are a core part of many analytics workloads. Backed by a steering committee of over 30 international HPC experts from academia, industry, and national laboratories, Graph 500 will establish a set of large-scale benchmarks for these applications. This BOF will unveil the first Graph 500 list, and discuss both today's Graph 500 benchmark and the evolution of that benchmark going forward. The Graph 500 steering committee is in the process of developing comprehensive benchmarks to address three application kernels: concurrent search, optimization (single source shortest path), and edge-oriented (maximal independent set). Further, we are in the process of addressing five graph-related business areas: Cybersecurity, Medical Informatics, Data Enrichment, Social Networks, and Symbolic Networks. The BOF will offer a forum for community and provide a rallying point for data intensive supercomputing problems, and follows the introduction of the Graph 500 at ISC2010. This is the first serious approach to complement the Top 500 with data intensive applications. Additionally, we are working with the SPEC committee to include our benchmark in their CPU benchmark suite. We anticipate the list will rotate between ISC and SC in future years.The Graph 500 was announced at ISC2010 and the first list will appear at SC2010.

2010年11月1日月曜日

[StreamXML] 関連論文

http://www.kde.cs.tsukuba.ac.jp/~vjordan/docs/

Regular Expression Matching on Graphics Hardware for Intrusion Detection

http://portal.acm.org/citation.cfm?id=1691157

Evaluating GPUs for Network Packet Signature Matching

http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.153.4770

iNFAnt: NFA Pattern Matching on GPGPU Devices

http://netgroup.polito.it/Members/niccolo_cascarano/pub/cuda-02-2010.pdf

Research into GPU accelerated pattern matching for applications in computer security

http://www.cosc.canterbury.ac.nz/research/reports/HonsReps/2009/hons_0905.pdf

High-throughput stream categorization and intrusion detection on GPU

http://ieeexplore.ieee.org/Xplore/login.jsp?url=http://ieeexplore.ieee.org/iel5/5550962/5558619/05558648.pdf%3Farnumber%3D5558648&authDecision=-203

Small-ruleset regular expression matching on GPGPUs

http://portal.acm.org/citation.cfm?id=1810130

SQL/XML-IMDBg: A GPU In-Memory Database and Query Co-Processor

http://www.slideshare.net/NVIDIA/1105-gtc09

2010年10月28日木曜日

[StreamGraph] 超大規模ネットワークの高速コミュニティ検知手法

[StreamGraph] グラフアルゴリズムのベンチマーク

Benchmark graphs for testing community detection algorithms, Andrea Lancichinetti et.al , 2008

http://pre.aps.org/abstract/PRE/v78/i4/e046110

2010年10月23日土曜日

[StreamGraph] Networks, Crowds, and Markets: Reasoning About a Highly Connected World

2010年10月22日金曜日

[StreamScale] 通信レイヤ

MINA is a simple yet full-featured network application framework which provides:

Unified API for various transport types:

TCP/IP & UDP/IP via Java NIO

Serial communication (RS232) via RXTX

In-VM pipe communication

You can implement your own!

Filter interface as an extension point; similar to Servlet filters

Low-level and high-level API:

Low-level: uses ByteBuffers

High-level: uses user-defined message objects and codecs

Highly customizable thread model:

Single thread

One thread pool

More than one thread pools (i.e. SEDA)

Out-of-the-box SSL · TLS · StartTLS support using Java 5 SSLEngine

Overload shielding & traffic throttling

Unit testability using mock objects

JMX managability

Stream-based I/O support via StreamIoHandler

Integration with well known containers such as PicoContainer and Spring

Smooth migration from Netty, an ancestor of Apache MINA.

[StreamScale] DB

- Apache Derby : RDB (Pure Java)

- Apache MINA : 効率的な通信レイヤの実装用

- APR (Apache Portable Runtime Project)

- Cassandra (http://cassandra.apache.org/)

2010年10月19日火曜日

[StreamScale] Java と Infiniband

Efficient Java Communication Libraries over InfiniBand

Guillermo L. Taboada

http://www.computer.org/portal/web/csdl/doi/10.1109/HPCC.2009.87

This paper presents our current research efforts on efficient Java communication libraries over InfiniBand. The use of Java for network communications still delivers insufficient performance and does not exploit the performance and other special capabilities (RDMA and QoS) of high-speed networks, especially for this interconnect. In order to increase its Java communication performance, InfiniBand has been supported in our high performance sockets implementation, Java Fast Sockets (JFS), and it has been greatly improved the efficiency of Java Direct InfiniBand (Jdib), our low-level communication layer, enabling zero-copy RDMA capability in Java. According to our experimental results, Java communication performance has been improved significantly, reducing start-up latencies from 34μs down to 12 and 7μs for JFS and Jdib, respectively, whereas peak bandwidth has been increased from 0.78 Gbps sending serialized data up to 6.7 and 11.2 Gbps for JFS and Jdib, respectively. Finally, it has been analyzed the impact of these communication improvements on parallel Java applications, obtaining significant speedup increases of up to one order of magnitude on 128 cores.

[StreamScale] 機能

エンジン (SSR)

- データレート、CPU 使用率の計測

可視化ツール

- ストリームモニタリングツール(ちゃんとタプルが流れているかどうかを可視化する)

- どのオペレータがどのノードに今アサインされているかを可視化

(最初はテキストベースでも良いでしょうが、ないと不便でしょう)

[StreamScale] SSR (StreamScale Runtime) のリモート起動

リモートの JVM の監視ツールとしては以下のようなツールも存在する。

jps - Java Virtual Machine Process Status Tool

http://download.oracle.com/javase/6/docs/technotes/tools/share/jps.html

[StreamScale] 実装時に参考になるであろう情報

http://www.ibm.com/developerworks/library/j-zerocopy/

[StreamScale] 設計

第1回目: Programming Model / API (2010/10/13)

第2回目: Runtime Architecture (2010/10/18)

第3回目: 第1回目、第2回目のまとめ、他に必要な機能の議論

詳細設計:2週間程度 (11月1週目、2週目)

- API および SPI 決定

実装(第1ラウンド:11月中旬~12月下旬)

- 分担

- テストケース: Test First の思想に基づく

Agile な開発方式をするので、

まず第一プロトタイプを年内に完成させるのが目標

2010年10月14日木曜日

PGAS 2010

以下が、採択された論文リストですが、まだダウンロードはできません。いくつか興味深い論文があります。

http://groups.google.com/group/pgas10/web/list-of-accepted-papers

The following papers (listed in no particular order) have been accepted for the conference:

Mads Ruben Burgdorff Kristensen and Brian Vinter. Numerical Python for Scalable Architectures

Vikas Aggarwal, Changil Yoon, Alan George, Herman Lam and Greg Stitt. Performance Modeling for Multilevel Communication in SHMEM+

Stefano Markidis and Giovanni Lapenta. Development and performance analysis of a UPC Particle-in-Cell code

Filip Blagojevic, Paul Hargrove, Costin Iancu and Kathy Yelick. Hybrid PGAS Runtime Support for Multicore Nodes

Megan Vance and Peter M. Kogge. Introducing mNUMA: An Extended PGAS Architecture

Nakao Masahiro, Lee Jinpil, Boku Taisuke and Sato Mitsuhisa. XcalableMP Implementation and Performance of NAS Parallel Benchmarks

Deepak Eachempati, Hyoung Joon Jun and Barbara Chapman. An Open-Source Compiler and Runtime Implementation for Coarray Fortran

Max Billingsley III, Beth Tibbitts and Alan George. Improving UPC Productivity via Integrated Development Tools

Nicholas Edmonds, Douglas Gregor and Andrew Lumsdaine. Extensible PGAS Semantics for C++

Montse Farreras and George Almasi. Asynchronous PGAS runtime for Myrinet networks

Han Dong, Shujia Zhou and David Grove. X10-Enabled MapReduce

Jithin Jose, Miao Luo, Sayantan Sur and Dhabaleswar K. Panda. Unifying UPC and MPI Runtimes: Experience with MVAPICH

Bill Scherer, Laksono Adhianto, Guohua Jin, John Mellor-Crummey and Chaoran Yang. Hiding Latency in Coarray Fortran 2.0

MapReduce 関係の最適化

http://personals.ac.upc.edu/dcarrera/papers/ICPP09.pdf

Phoenix Rebirth: Scalable MapReduce on a Large-Scale Shared-Memory System, Richard M. Yoo

http://csl.stanford.edu/~christos/publications/2009.scalable_phoenix.iiswc.pdf

Phoenix Project at Stanford University

http://mapreduce.stanford.edu/

Phoenix Project Slides

Deduce : 前のエントリ

2010年10月8日金曜日

オープンソースの LP Solver

COIN-ORプロジェクトのCLP: https://projects.coin-or.org/Clp

GNUのGLPK http://www.gnu.org/software/glpk/

性能比較: http://plato.asu.edu/ftp/lpfree.html

近況

最近は、来年度に向けた政府系予算取りに向けたプロポーザル作成に忙殺されています。採択された暁には、皆さんにご報告します。

10月下旬に行われるインターネットカンファレンス 2010 (査読つき)には、上野君、西井君、石井君の3人が採択されました。また、国際学会 DASFAA 2011 には西井君と松浦君が論文を投稿しました。

授業など忙しいと思いますが、やはり、論文、というのは単位や学士・修士論文以上に、個人の業績として一生残るものです。研究を大いに進めて、自分の大学での研究成果を積み重なっていってください。

2010年9月3日金曜日

[StreamGraph] Proximity Tracking on Time-Evolving Bipartite Graphs

http://www.siam.org/proceedings/datamining/2008/dm08_64_Tong.pdf

Given an author-conference network that evolves over time,

which are the conferences that a given author is most closely

related with, and how do they change over time? Large

time-evolving bipartite graphs appear in many settings, such

as social networks, co-citations, market-basket analysis, and

collaborative filtering.

Our goal is to monitor (i) the centrality of an individual

node (e.g., who are the most important authors?); and

(ii) the proximity of two nodes or sets of nodes (e.g., who

are the most important authors with respect to a particular

conference?) Moreover, we want to do this efficiently and

incrementally, and to provide “any-time” answers. We propose

pTrack and cTrack, which are based on random walk

with restart, and use powerful matrix tools. Experiments on

real data show that our methods are effective and efficient:

the mining results agree with intuition; and we achieve up to

15∼176 times speed-up, without any quality loss

[StreamGraph] グラフアルゴリズムの GPU による高速化

http://cvit.iiit.ac.in/papers/Pawan07accelerating.pdf

fast GPU algorithm for graph connectivity

http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=5470817

Collaborative Filtering with Temporal Dynamics

Collaborative Filtering with Temporal Dynamics

Authors:

Koren, Y.

Source:

KDD 2009, ACM, Paris, France (2009)

Abstract:

Customer preferences for products are drifting over time. Product perception and popularity are constantly changing as new selection emerges. Similarly, customer inclinations are evolving, leading them to ever redefine their taste. Thus, modeling temporal dynamics should be a key when designing recommender systems or general customer preference models. However, this raises unique challenges. Within the eco-system intersecting multiple products and customers, many different characteristics are shifting simultaneously, while many of them influence each other and often those shifts are delicate and associated with a few data instances. This distinguishes the problem from concept drift explorations, where mostly a single concept is tracked. Classical time-window or instance-decay approaches cannot work, as they lose too much signal when discarding data instances. A more sensitive approach is required, which can make better distinctions between transient effects and long term patterns. The paradigm we offer is creating a model tracking the time changing behavior throughout the life span of the data. This allows us to exploit the relevant components of all data instances, while discarding only what is modeled as being irrelevant. Accordingly, we revamp two leading collaborative filtering recommendation approaches. Evaluation is made on a large movie rating dataset by Netflix. Results are encouraging and better than those previously reported on this dataset.

Notes:

Won KDD'09 Best Research Paper award

2010年9月1日水曜日

[StreamGraph] Pregel: a system for large-scale graph processing

http://portal.acm.org/citation.cfm?doid=1807167.1807184

Many practical computing problems concern large graphs. Standard examples include the Web graph and various social networks. The scale of these graphs - in some cases billions of vertices, trillions of edges - poses challenges to their efficient processing. In this paper we present a computational model suitable for this task. Programs are expressed as a sequence of iterations, in each of which a vertex can receive messages sent in the previous iteration, send messages to other vertices, and modify its own state and that of its outgoing edges or mutate graph topology. This vertex-centric approach is flexible enough to express a broad set of algorithms. The model has been designed for efficient, scalable and fault-tolerant implementation on clusters of thousands of commodity computers, and its implied synchronicity makes reasoning about programs easier. Distribution-related details are hidden behind an abstract API. The result is a framework for processing large graphs that is expressive and easy to program.

有害 Tweet リアルタイム監視

http://japan.internet.com/busnews/20100831/5.html

"利用者のツイートが掲載される企業のキャンペーンページなどに無関係な宣伝や公序良俗に反するツイートが投稿された際は、リアルタイムでブロックしたり、メールでアラート"

"オプションで、ハッシュタグや一般キーワードの監視、商品購入を薦めるフレーズなどの自動ピックアップ機能"

2010年8月31日火曜日

DEDUCE: at the intersection of MapReduce and stream processing

DEDUCE: at the intersection of MapReduce and stream processing

Proceedings of the 13th International Conference on Extending Database Technology 2010

Vibhore Kumar(IBM)

http://portal.acm.org/citation.cfm?id=1739120

[StreamGPU] Singular Value Decomposition for Collaborative Filtering on a GPU

----

Singular Value Decomposition for Collaborative Filtering on a GPU, 2010

A collaborative ltering predicts customers' unknown preferences from known preferences. In a computation of the collaborative ltering, a singular value decomposition (SVD) is needed to reduce the size of a large scale matrix so that the burden for the next phase computation will be decreased. In this application, SVD means a roughly approximated factorization of a given matrix into smaller sized matrices. Webb (a.k.a. Simon Funk) showed an e ffective algorithm to compute SVD toward a solution of an open competition called "NetixPrize". The algorithm utilizes an iterative method so that the error of approximation improves in each step of the iteration. We give a GPU version of Webb's algorithm. Our algorithm is implemented in the CUDA and it is shown to be effi cient by an experiment.

http://iopscience.iop.org/1757-899X/10/1/012017/pdf/1757-899X_10_1_012017.pdf

---

Collaborative Filtering 関連の参考論文

A Survey of Collaborative Filtering Techniques, 2009

http://www.hindawi.com/journals/aai/2009/421425.html

Yunhong Zhou, Large-scale Parallel Collaborative Filtering forthe Netflix Prize

http://www.hpl.hp.com/personal/Robert_Schreiber/papers/2008%20AAIM%20Netflix/netflix_aaim08(submitted).pdf

2010年8月28日土曜日

戦略的高性能計算システム開発に関するワークショップ

http://www.open-supercomputer.org/workshop/

2010年8月19日木曜日

汎用並列分散処理基盤としての SPADE / System S

このような使われ方はむしろ迎合すべきもので、後者の計算モデルをバッチ型計算モデルと呼ぶとすると、データストリーム処理モデルと同様のプログラミングモデルとしてシステムを記述することができる利点があります。また、研究としては、このような考えを元に SPADE というデータフロー型のプログラミング言語を捉えると、以下の2つのアイデアが浮かんできます。

1.「SPADE プログラムから CPU/GPU (or Multi-GPU) 上で効率的に稼動するコードを生成する技術」

関連研究は以下の論文でMapReduce プログラムを GPU 上で稼動させる研究です。

Mars: A MapReduce Framework on Graphics Processors by Bingsheng He , Naga K. Govindaraju

2.「MapReduce プログラミングモデルを SPADE プログラムにコード変換する技術」

MapReduce プログラミングモデルで記述されたコードは Hadoop だけでなく、汎用の分散並列処理基盤としての System S 上で実行することができようになります。

2010年8月17日火曜日

Ricardo: Integrating R and Hadoop

Ricardo: Integrating R and Hadoop (URL)

Sudipto Das University of California, Santa Barbara, USA

Yannis Sismanis IBM Almaden Research Center, San Jose, USA

Kevin S. Beyer IBM Almaden Research Center, San Jose, USA

Rainer Gemulla IBM Almaden Research Center, San Jose, USA

Peter J. Haas IBM Almaden Research Center, San Jose, USA

John McPherson IBM Almaden Research Center, San Jose, USA

2010年8月16日月曜日

[StreamScale] 実装時の参考文献、参考技術

- Doug Lea による Java NIO を用いたサーバーサイド実装のスライド "Scalable IO in Java"

http://gee.cs.oswego.edu/dl/cpjslides/nio.pdf

- JDK 6 の NIO パッケージの新機能 http://download.oracle.com/javase/6/docs/technotes/guides/io/enhancements.html#6

java.nio.channels.SelectorProvider クラスの実装が、Linux Kernel 2.6 から導入された epoll システムコールを使用するように書き換えられている

- Distributed computing using Java: A comparison of two server designs

- NPTL (Native Posix Threading Library) : POSIX スレッドのアプリケーションをOS レベルで効率的に動作させる機能。Linux Kernel 2.6 以降から導入されたが、Java の Non-Blocking IO などの必要不可欠性が薄まりつつある可能性もある

[コンポーネント化]

- Apache Cocoon: Spring ベースのソフトウェアコンポーネント化ライブラリ。Matt Welsh の SEDA (Staged Event Driven Architecture) を踏襲. OSGi とも類似している

2010年8月10日火曜日

[StreamSR] 国際会議

DASFAA 2011 (投稿予定日:9月中旬, 12月10日採択通知)

ICME (International Conference on Multimedia & Expo) (投稿予定日:2011年1月)

http://www.icme2010.org/dates.html (ICME 2010)

DMS (International Conference on Distributed Multimedia Systems) (投稿予定日:2011年3月30日)

http://www.ksi.edu/seke/dms11.html

2010年8月9日月曜日

2010年8月6日金曜日

IBM SUR Award

2010年8月4日水曜日

SWoPP 2010

鈴村は BoF セッションでのパネリストとして参加。特に昨今、博士課程に進学することを非常に間違った解釈でとらえている人がいるので、そのような学生向けに、民間企業の研究所の立場としてお話ししました。前に3年生の授業で話したことがありますが、もし興味があったらいつでも話します。

2010年7月28日水曜日

データストリーム処理におけるメモリ消費量を考慮したジョブスケジューリング

実行時に動的に均衡を適正に保つ上で、いくつかの条件を満たす必要があるが、その例を次に示す。

- 溜めていたデータはロスしてはならない。すべての溜めていたデータは正しくマイグレーション先に送られる。マイグレーション先への負荷を最小限に留める。

- データは留め止めなく流れてくるため、上流のオペレータのバッファで滞留しないように、瞬間的にマイグレーションできなければならない

2010年7月26日月曜日

[StreamDS] 論文投稿完了

[StreamCloud] 関連論文

- Understanding Performance Interference of I/O Workload in Virtualized Cloud Environments (CLOUD2010-3007), Xing Pu

- Performance Measurements and Analysis of Network I/O Applications in Virtualized Cloud (CLOUD2010-3008), Yiduo Mei

- Integrating Resource Consumption and Allocation for Infrastructure Resources On-Demand (CLOUD2010-3010), Ying Zhang

- Mining Twitter in the Cloud: A Case Study (CLOUD2010-3014), Pieter Noordhuis

- Maximizing Cloud Providers' Revenues via Energy Aware Allocation Policies (CLOUD2010-3017), Michele Mazzucco

- Workload Migration into Clouds . Challenges, Experiences, Opportunities(CLOUD2010-3020), C. Ward

- Performance and Power Management for Cloud Infrastructures (CLOUD2010-3042), Hien Nguyen Van

- Optimal Resource Allocation in Clouds (CLOUD2010-3053), Fangzhe Chang

- Fault Tolerance Middleware for Cloud Computing (CLOUD2010-3009), Wenbing Zhao

- Using Cloud Technologies to Optimize Data-Intensive Service Applications (CLOUD2010-3003)

Dirk Habich

2010年7月14日水曜日

新たなデータストリーム処理系を作る動機

処理系の実装の動機は「System S のランタイム周りの制約から離れたい」というのが大まかな動機です。例えば、現在感じているだけでも以下のような制約があります。

(1) ランタイムの制御、特にスケジューラ周りに手を入れられなく、その周辺の研究に手を出しにくい。たとえば、今のスケジューラは CPU 使用率しか見ていませんが、メモリ使用率やリソース(データベースなど)の局所性を生かした作りになっていません. オペレータの最適配置問題を最適化問題に落として解こうと思っても、その結果をランタイムに反映できません

(2) 高信頼性(つまり必ずデータロスはない)を実現するデータストリーム処理系を実装するには、現在は、System S の外でその機構を作らないといけません。ランタイム自身にその機構を加えることができるはずです。

(3) 鈴村の SYSTOR 2010 で発表した論文では、入力データの自動分散化がスケーラビリティを出すには重要と書きましたが、System S でも強引にやればできますが、自然な形でランタイムでその機構が作れるはずです。

(4) システム自身が様々なパッケージに依存しており、「Write once, run anywhere」 ではありません. Amazon 上で動かしたり、TSUBAME などの巨大クラスタ上でスケーラビリティテストをやりたくてもできません。

実装言語は Javaにします. 性能は多少犠牲にしますが、プラットフォーム独立性や開発生産性およびメンテナンス性を考え, Java で実装します。特に金融のようなマイクロ秒で勝負するような処理系を作ることを目的にしていないため、レイテンシなりスループットが少し下がっても問題ないでしょう。また、GPU との親和性は当然悪くなり、JNI 経由呼び出しになります。その代わり、Python や Ruby, PHP と言ったスクリプト言語の処理系も JVM ベースのものが最近はあるため、UDOP に相当するようなユーザ定義オペレータをそれらのスクリプト言語でも記述することも当然可能になります。

ただ、最後に断っておきますが、当然、System S も併用していく予定です。ストリームマイニングやアルゴリズム周りの研究、そして GPU との統合は System S 上でも可能なはずです。

2010年7月12日月曜日

論文締め切り

2010年7月2日金曜日

「タブレットコンピューターとそれを取り巻く産業構造に係る最近の動向」

http://www.ipa.go.jp/about/NYreport/201006.pdf

2010年7月1日木曜日

[StreamTwitter] 2010年6月の Tweet 数解析

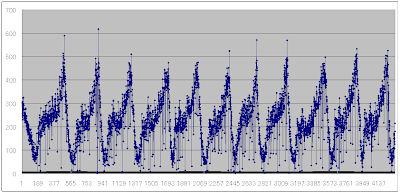

結果として面白いのは、20214番目のデータ(1単位は1分)で通常のピーク数よりも3倍の Tweet 数が見られ、Bursty なデータが見て取れるということです。20214番目は、6月14日あたりということですが、これはワールドカップで、日本とカメルーンが対戦した日です。

解析ですが、NFS がボトルネックとなるので、st01 のみの4コアで計測し、3時間程度で解析が終了しました。st01-st08 まで8台あるので、入力データ及び出力データをうまくローカルディスクを利用しながら解析すれば30分台で解析できるはずです。

以下は、そのバースト時が起きた時刻を中心とした1時間のデータ。

以下、6月10日から6月30日までのグラフ。バーストが何回か起きていることが見てとれる。

2010年6月30日水曜日

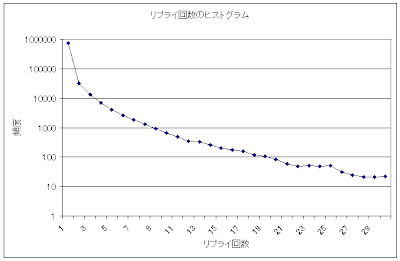

[StreamTwitter] べき乗則に従う Twitter のリプライ回数の頻度

2010年6月26日土曜日

Twitter ログ解析 4/1 から 4/26 の1分間単位の Tweet 数の変遷

2010年6月24日木曜日

講演@NEC 中央研究所

2010年6月23日水曜日

博士課程に進学するということ

ICDE 2010 勉強会

[論文紹介] Operator Placement Optimization

[論文紹介] High-Availability Algorithms for Distributed Stream Processing, ICDE 2005

- Precise Recovery: 一時的に処理の応答時間は増えるが、Failure を完全に排除し、結果にも影響を与えない。金融、証券系では必須

- Rollback Recovery: 入力データのデータリカバリまでは保証するが、出力する計算結果が完全に一致するとは限らない。プライマリとバックサーバーノードの2つがあり、バックサーバーで再処理させた場合に結果が異なることを許す

- Gap Recovery: 最もリカバリー保証がゆるい方式で、結果に影響を与えない古いデータは破棄し、ランタイムへのオーバーヘッドやリカバリーのスピードを高速化する方式

- Amenesia: ランタイムへのオーバーヘッドを与えずに Gap Recovery を実現

- Passive-Standby: プライマリはセカンダリ(バックアップ)に定期的にステートを送信

- Active-Standby: セカンダリはすべてのタプルを並行して処理

- Upstream backup: アプリケーション上の下流のノードが落ちた際には、上流のノードが、ログに一時的に保存しているタプルを再送信